Fetching Data From One Iflow Via Process Direct And Invoking A Loop Process Call With Exception Handling

14th June 2022

INTEGRATION

Introduction

Hello

This is my first blog post, in which I describe a real-time CPI scenario: one iFlow calls a second iFlow, which splits the data and processes it with a Looping Process Call. Exception messages are stored in the data store (together with a global variable), and a mail is also sent to the receiver. The receiver also wants to keep track of the log ID, which is maintained in the Local Integration Process.

The solution rests on two building blocks. The ProcessDirect adapter establishes communication between integration processes within the same tenant. A Looping Process Call invokes a Local Integration Process repeatedly, governed by a loop condition and a maximum number of iterations.

Pre-Requisite:

1. Access to SAP BTP Cockpit

Scenario

In this blog post, we fetch data from an OData service via Request Reply and send it through ProcessDirect to a second iFlow. In the second iFlow, a Parallel Multicast branches the data. One branch goes to the Looping Process Call, where the data is split, and a Logger script is added so that the log ID can be checked after deployment.

An Exception Subprocess is added to store the data in the data store, and a Content Modifier sets the message that is sent to the receiver.

To keep the example simple, I created a basic integration (Fig 1 & 2) that includes only a timer and one Mail receiver adapter.

Fig 1 iflow-1 with Process direct Receiver

Fig 2 iflow-2 with Process direct Receiver

⦁ Details of Adapter and Palette Configuration

⦁ iFlow-1

OData Configuration (Fig 3)

Use any free OData service (here I have used Northwind) and select the entities as per your requirement.

Process Direct Receiver Adapter (Fig 4)

Set the address to the same value that is configured in the ProcessDirect sender adapter of the second iFlow.

⦁ iFlow-2

Content Modifier

Set the current timestamp as a header value:

${date:now:dd-MM-yyyy HH:mm}
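The pattern can also be tried outside the Content Modifier. The following is a minimal sketch in plain Groovy, assuming the date expression follows `java.text.SimpleDateFormat` pattern rules. The helper name `formatTimestamp`, the sample date, and the fixed UTC time zone are hypothetical and only serve to make the result reproducible.

```groovy
import java.text.SimpleDateFormat

// Hypothetical helper: formats a date with the same pattern
// that is used in the Content Modifier header above.
String formatTimestamp(Date date, TimeZone zone) {
    def format = new SimpleDateFormat("dd-MM-yyyy HH:mm")
    format.setTimeZone(zone)
    return format.format(date)
}

// Sample input (assumption): 14 June 2022, 09:30 UTC in epoch milliseconds.
def sample = new Date(1655199000000L)
println formatTimestamp(sample, TimeZone.getTimeZone("UTC"))
```

Note that `MM` stands for the month and `mm` for the minutes, so swapping them silently produces a wrong timestamp.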

Looping Process Call

A Looping Process Call in CPI invokes a Local Integration Process iteratively, as governed by the loop condition. The loop runs at most the configured maximum number of iterations.
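To make the interplay between the loop condition and the iteration cap concrete, here is a rough sketch in plain Groovy. It is not how CPI implements the step. The function `runLoop`, the `exchange` map, and the `pagesLeft` counter are hypothetical names, and the sketch assumes that the condition is evaluated after each pass, which you should verify on your own tenant.

```groovy
// Hypothetical stand-in for a Looping Process Call: repeats `localProcess`
// until the condition no longer holds or `maxIterations` is reached.
// Assumption: the condition is checked after each pass.
int runLoop(Map exchange, int maxIterations, Closure<Boolean> condition, Closure localProcess) {
    int iterations = 0
    boolean keepGoing = maxIterations > 0
    while (keepGoing) {
        localProcess(exchange, iterations)   // one call of the local process
        iterations++
        keepGoing = iterations < maxIterations && condition(exchange)
    }
    return iterations
}

// Example: three pages to process, with a cap of ten iterations.
def exchange = [pagesLeft: 3, processed: []]
def count = runLoop(exchange, 10, { it.pagesLeft > 0 }) { ex, index ->
    ex.processed << ("page-" + index)
    ex.pagesLeft = ex.pagesLeft - 1
}
println "iterations=" + count + ", processed=" + exchange.processed
```

With a cap lower than the number of pages, the loop stops early, which is why the maximum should be chosen generously enough for the expected data volume.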

Local Integration Process

In Transaction Handling, select the option required for the calling process.

General Splitter (Fig 5)

Choose the node to split on according to your payload; here I have selected Product.
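What splitting on the Product node means can be illustrated with a small sketch in plain Groovy using the standard Java XML APIs. The helper `splitByElement` and the sample payload are hypothetical. The sketch returns bare fragments only, whereas the General Splitter, as far as I know, also keeps the enveloping elements, so treat it as an illustration of the idea rather than of the step itself.

```groovy
import javax.xml.parsers.DocumentBuilderFactory
import javax.xml.transform.OutputKeys
import javax.xml.transform.TransformerFactory
import javax.xml.transform.dom.DOMSource
import javax.xml.transform.stream.StreamResult

// Hypothetical helper: returns one XML fragment per matching element.
List<String> splitByElement(String xml, String elementName) {
    def builder = DocumentBuilderFactory.newInstance().newDocumentBuilder()
    def document = builder.parse(new ByteArrayInputStream(xml.getBytes("UTF-8")))
    def nodes = document.getElementsByTagName(elementName)
    def transformer = TransformerFactory.newInstance().newTransformer()
    transformer.setOutputProperty(OutputKeys.OMIT_XML_DECLARATION, "yes")
    def parts = []
    for (int i = 0; i < nodes.getLength(); i++) {
        // Serialize each matching element into its own string.
        def writer = new StringWriter()
        transformer.transform(new DOMSource(nodes.item(i)), new StreamResult(writer))
        parts << writer.toString()
    }
    return parts
}

// Sample payload (assumption): two products in one message.
def payload = "<Products><Product><ProductID>1</ProductID></Product>" +
              "<Product><ProductID>2</ProductID></Product></Products>"
def parts = splitByElement(payload, "Product")
parts.each { println it }
```

Each fragment then corresponds to one message that the following steps, such as the Logger script, process individually.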

Logger Script

The Logger script writes the payload to the message log, which we can check on the monitoring page.

    import com.sap.gateway.ip.core.customdev.util.Message;

    def Message processData(Message message) {
        // Read the current payload as a String.
        def body = message.getBody(java.lang.String) as String;
        // messageLogFactory is provided by the CPI script runtime.
        def messageLog = messageLogFactory.getMessageLog(message);
        if (messageLog != null) {
            // Record a custom property in the message processing log.
            messageLog.setStringProperty("log1", "Printing Payload As Attachment");
            // Attach the payload so it can be viewed on the monitoring page.
            messageLog.addAttachmentAsString("log1", body, "text/plain");
        }
        return message;
    }

Exception Sub Process (Fig 6)

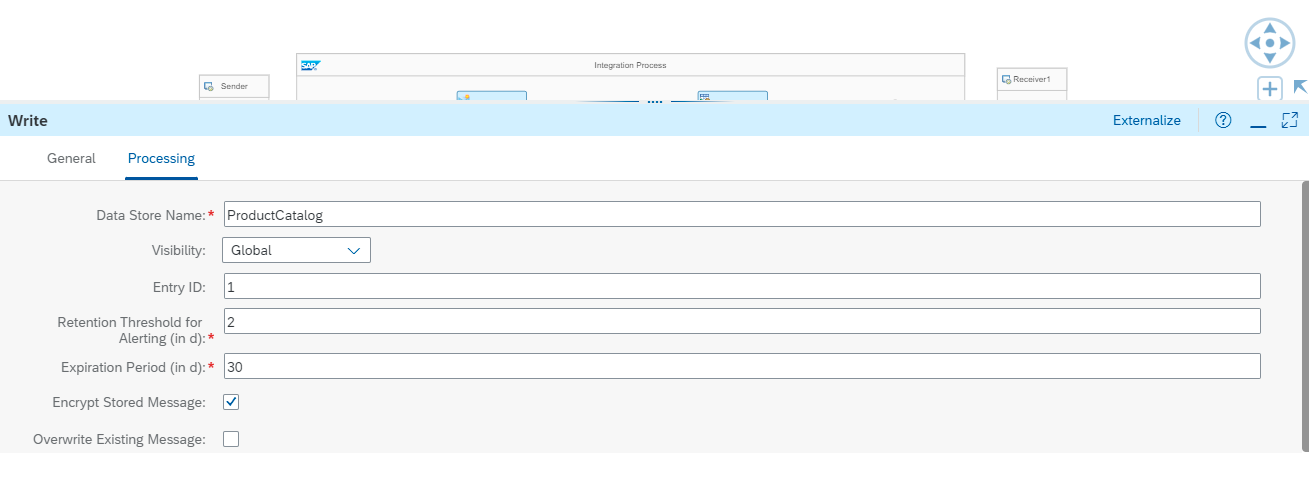

If the iFlow runs into an error, the message goes to the data store through the Exception Subprocess. We can check it there by fetching the data from a different iFlow, because we have set the Global Variable in the Write Variables step.

Content Modifier

Set the message in the message body that is sent to the receiver.
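If the mail text should contain the actual error, it could be assembled in a script instead of being typed as static text. The sketch below is plain Groovy under stated assumptions: the caught exception is expected in the exchange property `CamelExceptionCaught`, and the helper `buildErrorBody`, the wording of the mail, and the sample exception are hypothetical. In a real Script step the properties would come from `message.getProperties()`.

```groovy
// Hypothetical helper: builds the mail text from the exchange properties.
// Assumption: the caught exception is stored under "CamelExceptionCaught".
String buildErrorBody(Map<String, Object> properties, String timestamp) {
    def error = properties.get("CamelExceptionCaught")
    def reason = (error instanceof Throwable) ? error.getMessage() : "unknown error"
    return "The iFlow failed at " + timestamp + ".\nReason: " + reason
}

// Sample input (assumption): a simulated exception and a fixed timestamp.
def props = [CamelExceptionCaught: new IllegalStateException("OData service not reachable")]
println buildErrorBody(props, "14-06-2022 09:30")
```

The fallback text covers the case where the property is missing, so the mail is still sent with a readable body.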

Deployment (Fig 8)

After deployment, the mail is received as follows.

Conclusion

This blog post shows how to connect two iFlows via ProcessDirect and how to store exception data in the data store, which illustrates the use of Write Variables and the data store Write operation. It also shows how a Logger script inside the process call lets you check the logs on the monitoring page.

Please like the blog post if you find the information useful. Also, please leave your thoughts and opinions in the comments section.

Good luck with your studies!…

